#Using reticulate to run python LangChain code in R

# install.packages("reticulate")

# Import the reticulate library

library(reticulate)

#This tells reticulate which Python installation to use

#This is only necessary if you have multiple installations of Python on your computer

#You will need to make sure Python is set up properly, with all dependencies used in the Python code below installed

#e.g. 'pip install langchain' in the system command line (os is part of the Python standard library and needs no install)

use_python("C:/Users/user/AppData/Local/Programs/Python/Python310/python.exe")

#We find all file paths for txt files in a folder

#We can then iterate over all the papers with our LangChain code

# specify the directory

directory <- "PUT YOUR FILE DIRECTORY HERE"

# get all txt files

paper_fp <- list.files(path = directory, pattern = "\\.txt$", full.names = TRUE)

# print all txt filepaths

print(paper_fp)

#Start a timer

start_time <- Sys.time()

Automating Paper Screening For Systematic Reviews: A Game of 20 Questions (Part III)

TLDR: AI can help filter out irrelevant scientific papers based on screening criteria. Some prompt formats work better than others. Single prompts often produce error-filled responses but using multiple prompts/responses for screening works well.

This is Part III in a series about applying AI for data science on scientific articles. See Part I: Virology Data Science with LangChain + ChatGPT and Part II: Automating Literature Searches in R. Stay tuned for future posts where I put it all together.

Intro

If you’ve been following along, I am building a pipeline leveraging AI for automated analysis of scientific texts. In this post, I am testing the ability of large language models (LLMs) like ChatGPT to screen papers based on specific criteria - an essential (and laborious) step for systematic reviews.

Screening papers is critical for ensuring the relevance and quality of the included studies, excluding those that do not meet pre-defined inclusion criteria. For huge studies that use necessarily broad search terms to capture papers, a large number of papers returned are completely irrelevant. Naturally, automation can assist in this initial deliberation but humans should be kept in the loop for final decisions on study inclusion.

In my last post, I used a combination of the R packages litsearchr and ZoteroR to automate the acquisition of papers and save them to the web-synced Zotero app. Shout out to the creator of litsearchr, Dr. Eliza Grames. Dr. Grames has worked extensively on methods for open-source systematic review/literature mining/evidence synthesis, creating EntoGEM - “a community-driven project that aims to compile evidence about global insect population and biodiversity status and trends”. Much like a lot of the work from Verena I touched on in my first post, it involves harmonization of multiple data streams, careful curation of metadata, and screening of scholarly articles. We’ll see if current LLMs can expedite the paper screening process for large projects like these.

Workflow

Here, we use a series of prompts to determine if a paper is suitable for inclusion in our downstream analysis. We need to assess the suitability of the paper using the fewest prompts/API calls possible. If we can’t conclusively accomplish this with the LLM, we need to at least flag the paper for further manual review.

Let’s jump back to our mosquito and virus paper examples from Dr. Colin Carlson’s group.

Here’s the section on screening:

“We performed an initial screen of records based on abstracts, excluding reviews, methodology descriptions, and studies with no experimental infection (n = 135), studies with non-virus infections, insect-specific infections, infection regimes with confounding treatments (i.e., coinfection with Wolbachia or insect-specific viruses; n = 71), studies involving infection in non-mosquitoes, experimental vector infection without any reported results describing competence quantitatively or ex vivo data (n = 55), and studies fitting multiple exclusion criteria (n = 22).”

The inclusion criterion we evaluate here is the presence of experiments involving BOTH mosquitoes and viruses where mosquitoes are infected.

We are going to use papers identified in the original study dataset as positives that should pass selection checks. For negatives, I searched around to find mosquito-only papers, virus-only papers, and random subjects. I’ve included the DOIs of those papers way below in the results section. At the end, we’ll inspect the results and see how the screening prompts did.

Designing the prompts for the screening steps, I’ve used a mix of multiple-choice and yes-no questioning. I also tried asking the model to format the response in different ways such as assigning a likelihood score based on a statement.

| # | Question |
|---|---|
| 1 | Classify this text into one of the following groups: 1. an experiment involving mosquitoes is performed, 2. experiments are performed but none involving mosquitoes, or 3. no experiments are performed. Return only the number of the group that most fits as a separate line inside of brackets. I need strict adherence to this format, please. |
| 2 | Classify this text into one of the following groups: 1. an experiment involving mosquitoes is performed with viruses, 2. experiments are performed involving mosquitoes but none with viruses, or 3. no experiments are performed involving mosquitoes. Return only the number of the group that most fits as a separate line inside of brackets. I need strict adherence to this format, please. |
| 3 | Someone indicated an experiment involving mosquitoes and viruses is performed in the text. Return TRUE if this is accurate. Otherwise, return FALSE. I need strict adherence to this format, please. Value: |
| 4 | Someone indicated an experiment involving mosquitoes and viruses is performed in the text. Return a numerical score between 0 and 100 that reflects how likely it is this statement is true. I need strict adherence to this format, please. Score: |
| 5 | Do the authors infect mosquitoes with viruses in the text? Return TRUE if they did. Otherwise, return FALSE. I need strict adherence to this format, please. Value: |
| 6 | Someone indicated that this text serves as a primary source for an experiment that was conducted by the authors. Return a numerical score between 0 and 100 that reflects how likely it is this statement is true. I need strict adherence to this format, please. Score: |
| 7 | Find an example of an experiment involving both mosquitoes-infected viruses performed in this text. If there is none, return FALSE. I need strict adherence to this format, please. Value: |

Code

Let’s look at that R code:

The reticulate package lets us use LangChain code written in Python in the R environment. Sure, we could have simply run the Python script separately. However, this keeps me from having to leave R, integrating seamlessly with the litsearchr workflow from the last post. We can go directly from an auto-updating stream of papers to screening them.

#Initial setup of the LangChain components

py_run_string('

import os

os.environ["OPENAI_API_KEY"] = "PUT YOUR API KEY HERE"

from langchain.chat_models import ChatOpenAI

from langchain import OpenAI

from langchain.embeddings.openai import OpenAIEmbeddings

from langchain.chains import RetrievalQA

from langchain.vectorstores import Chroma

from langchain.text_splitter import TokenTextSplitter

from langchain.prompts.chat import (

ChatPromptTemplate,

SystemMessagePromptTemplate,

HumanMessagePromptTemplate,

)

from langchain.document_loaders import UnstructuredFileLoader

llm = ChatOpenAI(

model_name="gpt-3.5-turbo",

temperature=0,

request_timeout=120)

embeddings = OpenAIEmbeddings()

system_template = """

you are an assistant with expertise in virology designed to identify experimental details from scientific texts

###################

Text:

{context}

"""

messages = [

SystemMessagePromptTemplate.from_template(system_template),

HumanMessagePromptTemplate.from_template("{question}")

]

prompt = ChatPromptTemplate.from_messages(messages)

chain_type_kwargs = {"prompt": prompt}

print("PASS")

')

This sets up LangChain for the multiple successive prompts later. It loads the model, embeddings, and prompt template. We print PASS at the end to confirm it ran smoothly. Additionally, splitting the code between a setup stage and the prompting avoids redundant re-running of the setup for each prompt.

screen_paper <- function(filepath) {

#Read in the paper local file path

rawtext=readLines(filepath)

filtext=rawtext[rawtext!=""]

paper_txt <- paste(filtext,collapse = "\n\n ")

#Need to add some error catching for weirdly formatted or empty txt files

#Pass the R variable to Python

#Assigning through py avoids breaking the Python string when the text contains quotes or backslashes

py$paper_txt <- paper_txt

#Call the LLM and collect the response

py_run_string('

from langchain.docstore.document import Document

from langchain.text_splitter import CharacterTextSplitter

text_splitter = CharacterTextSplitter(chunk_size=800, chunk_overlap=50)

texts = text_splitter.split_text(paper_txt)

documents = [Document(page_content=t) for t in texts[:3]]

vectorstore = Chroma.from_documents(documents, embeddings)

# create Q+A chain

qa = RetrievalQA.from_chain_type(

llm=llm,

chain_type="stuff",

retriever=vectorstore.as_retriever(search_kwargs={"k": 1}),

chain_type_kwargs=chain_type_kwargs,

return_source_documents=False)

print("PASS")

')

response = py_run_string('

import time

def callAttempt(query, max_attempts=3, wait_time=15, wt_increment=30):

    # Result variable

    id_result = None

    # Retry attempts

    attempts = 0

    while attempts < max_attempts:

        try:

            # Attempt to get the result

            id_result = qa({"query": query})["result"]

            # If the operation is successful, break the loop

            break

        except Exception as e:

            print(f"An error occurred: {str(e)}")

            attempts += 1

            # Wait before the next attempt

            print(f"Waiting for {wait_time} seconds before retrying...")

            time.sleep(wait_time)

            # Increase the wait time

            wait_time += wt_increment

    # Return the result (None if every attempt failed)

    return id_result

# Usage

# Consider adding wait times between each call - may improve RateLimitError

query = "Classify this text into one of the following groups: 1. an experiment involving mosquitoes is performed, 2. experiments are performed but none involving mosquitoes, or 3. no experiments are performed. Return only the number of the group that most fits as a separate line inside of brackets. I need strict adherence to this format, please."

id_result1 = callAttempt(query=query)

query = "Classify this text into one of the following groups: 1. an experiment involving mosquitoes is performed with viruses, 2. experiments are performed involving mosquitoes but none with viruses, or 3. no experiments are performed involving mosquitoes. Return only the number of the group that most fits as a separate line inside of brackets. I need strict adherence to this format, please."

id_result2 = callAttempt(query=query)

query = "Someone indicated an experiment involving mosquitoes and viruses is performed in the text. Return TRUE if this is accurate. Otherwise, return FALSE. I need strict adherence to this format, please. Value:"

id_result3 = callAttempt(query=query)

query = "Someone indicated an experiment involving mosquitoes and viruses is performed in the text. Return a numerical score between 0 and 100 that reflects how likely it is this statement is true. I need strict adherence to this format, please. Score:"

id_result4 = callAttempt(query=query)

query = "Do the authors infect mosquitoes with viruses in the text? Return TRUE if they did. Otherwise, return FALSE. I need strict adherence to this format, please. Value:"

id_result5 = callAttempt(query=query)

query = "Someone indicated that this text serves as a primary source for an experiment that was conducted by the authors. Return a numerical score between 0 and 100 that reflects how likely it is this statement is true. I need strict adherence to this format, please. Score:"

id_result6 = callAttempt(query=query)

query = "Find an example of an experiment involving both mosquitoes-infected viruses performed in this text. If there is none, return FALSE. I need strict adherence to this format, please. Value:"

id_result7 = callAttempt(query=query)

')

extract_before_dot <- function(string) {

split_string <- strsplit(string, ".", fixed = TRUE)

return(split_string[[1]][1])

}

result = tryCatch({

id1 = py_to_r(response)$id_result1

id2 = py_to_r(response)$id_result2

id3 = py_to_r(response)$id_result3

id4 = py_to_r(response)$id_result4

id5 = py_to_r(response)$id_result5

id6 = py_to_r(response)$id_result6

id7 = py_to_r(response)$id_result7

llm_row=c(id1,id2,extract_before_dot(id3),extract_before_dot(id4),id5,extract_before_dot(id6),id7)

return(llm_row)

}, error = function(e) {

# Handle the error here

errorMessage <- conditionMessage(e)

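# Log the error message

message("An error occurred: ", errorMessage)

Sys.sleep(60)

# Return the message as a string so the calling loop can detect the failure

return(paste("An error occurred:", errorMessage))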

})

return(result)

}

#Run the python code for all papers in our folder and coerce it to a dataframe

screen_df=data.frame()

for(i in paper_fp){

holder = screen_paper(i)

if(any(grepl("An error occurred:", holder))){

holder = c(i,NA,NA,NA,NA,NA,NA,NA)

holder = as.data.frame(t(holder))

colnames(holder) = c("fp","c1","c2","c3","c4","c5","c6","c7")

screen_df = rbind(screen_df,holder)

}else{

holder = as.data.frame(t(c(i, holder)))

colnames(holder) = c("fp","c1","c2","c3","c4","c5","c6","c7")

screen_df = rbind(screen_df,holder)

}

}

# End the timer and calculate the difference

end_time <- Sys.time()

time_taken <- end_time - start_time

# Print the execution time

print(time_taken)

There is a lot going on here. We’ve wrapped the process of asking our prompts for each paper in a function. The paper text (saved on our local machine in this case) is read in by R and passed to Python. A text splitter then breaks that text into chunks, which are stored in a Chroma vector database. The chunk most relevant to each question is added to the prompt sent to the LLM, hopefully allowing for accurate document question answering. There are error catchers scattered through the code. In cases where the ChatGPT API is down or we hit the rate limit, these keep the code running despite errors being thrown. The last bit simply combines all the results into a dataframe called screen_df.
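To make the split-and-retrieve step less of a black box, here is a minimal plain-Python sketch of the idea. It is not what LangChain or Chroma do internally: `split_text` uses fixed character windows (reusing the chunk_size=800 and chunk_overlap=50 settings from the code above), and `most_relevant_chunk` ranks chunks by shared words as a crude stand-in for embedding similarity. Both function names are made up for illustration.

```python
import re

def split_text(text, chunk_size=800, chunk_overlap=50):
    """Cut text into fixed-size character windows that overlap slightly.

    Simplification: the real splitter breaks on separators first.
    """
    step = chunk_size - chunk_overlap
    last_start = max(len(text) - chunk_overlap, 1)
    return [text[start:start + chunk_size] for start in range(0, last_start, step)]

def words(text):
    """Lowercased set of alphabetic words in the text."""
    return set(re.findall(r"[a-z]+", text.lower()))

def most_relevant_chunk(chunks, question):
    """Pick the chunk sharing the most words with the question.

    Assumption: word overlap stands in for embedding similarity here.
    """
    question_words = words(question)
    return max(chunks, key=lambda chunk: len(words(chunk) & question_words))

chunks = [
    "We reviewed the economics of tourism.",
    "Aedes mosquitoes were infected with Zika virus.",
]
best = most_relevant_chunk(chunks, "Do the authors infect mosquitoes with viruses?")
print(best)
```

With k set to 1 in the retriever, only a single chunk like `best` reaches the LLM for each question, which is worth keeping in mind when answers look inconsistent.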

Evaluating Results

Here are the questions again:

| # | Question |
|---|---|
| 1 | Classify this text into one of the following groups: 1. an experiment involving mosquitoes is performed, 2. experiments are performed but none involving mosquitoes, or 3. no experiments are performed. Return only the number of the group that most fits as a separate line inside of brackets. I need strict adherence to this format, please. |
| 2 | Classify this text into one of the following groups: 1. an experiment involving mosquitoes is performed with viruses, 2. experiments are performed involving mosquitoes but none with viruses, or 3. no experiments are performed involving mosquitoes. Return only the number of the group that most fits as a separate line inside of brackets. I need strict adherence to this format, please. |
| 3 | Someone indicated an experiment involving mosquitoes and viruses is performed in the text. Return TRUE if this is accurate. Otherwise, return FALSE. I need strict adherence to this format, please. Value: |
| 4 | Someone indicated an experiment involving mosquitoes and viruses is performed in the text. Return a numerical score between 0 and 100 that reflects how likely it is this statement is true. I need strict adherence to this format, please. Score: |
| 5 | Do the authors infect mosquitoes with viruses in the text? Return TRUE if they did. Otherwise, return FALSE. I need strict adherence to this format, please. Value: |
| 6 | Someone indicated that this text serves as a primary source for an experiment that was conducted by the authors. Return a numerical score between 0 and 100 that reflects how likely it is this statement is true. I need strict adherence to this format, please. Score: |
| 7 | Find an example of an experiment involving both mosquitoes-infected viruses performed in this text. If there is none, return FALSE. I need strict adherence to this format, please. Value: |

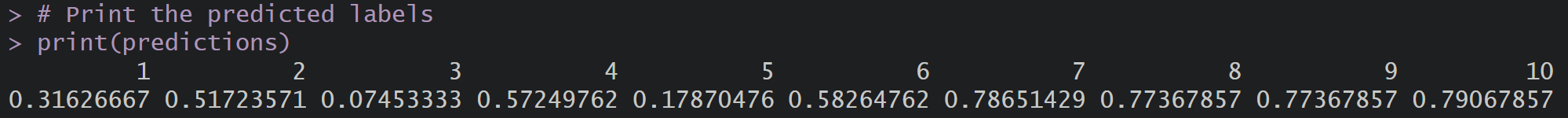

Let’s see the results of our prompting:

There are clear inconsistencies between the responses to these different prompts, and no single prompt was entirely accurate. After testing the first couple, it became clear that using multiple prompts would be necessary to improve screening accuracy. With these results, I switched from relying on the prompt responses directly to using them as variables for tried-and-true predictive models. I briefly cleaned up the response outputs to boolean or dummy-coded variables before doing this (this can be done automatically with regular expressions).
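For the curious, the regular-expression cleanup could look something like the sketch below. It is written in Python to sit alongside the LangChain code, and every function name is hypothetical. It assumes the three response shapes the prompts ask for: a bracketed group number, TRUE/FALSE, or a 0-100 score. Anything that does not match comes back as None so it can be flagged instead of guessed.

```python
import re

def parse_group(response):
    """Return the bracketed group number (1-3) as an int, or None if absent."""
    match = re.search(r"\[\s*([1-3])\s*\]", response or "")
    return int(match.group(1)) if match else None

def parse_bool(response):
    """Return 1 for TRUE, 0 for FALSE, None if neither word appears."""
    match = re.search(r"\b(TRUE|FALSE)\b", response or "", flags=re.IGNORECASE)
    if match is None:
        return None
    return 1 if match.group(1).upper() == "TRUE" else 0

def parse_score(response):
    """Return the first standalone 0-100 integer in the response, or None."""
    match = re.search(r"\b(\d{1,3})\b", response or "")
    if match is None:
        return None
    score = int(match.group(1))
    return score if 0 <= score <= 100 else None

def dummy_code_group(group):
    """One-hot encode a 1-3 group as three 0/1 indicator variables."""
    return [1 if group == k else 0 for k in (1, 2, 3)]
```

A None from any parser is a good reason to send that paper to manual review, since it means the model ignored the requested format.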

Using prompt responses as variables for logistic regression:

# Build the logistic regression model

model <- glm(label ~ c1 + c2 + c3 + c4 + c5 + c6 + c7, data = screen_df, family = "binomial")

# Make predictions on the new data

predictions <- predict(model, newdata = screen_df2, type = "response")

# Convert predicted probabilities to binary labels (0 or 1) using a threshold (e.g., 0.5)

binary_predictions <- ifelse(predictions >= 0.5, 1, 0)

# Print the predicted labels

print(binary_predictions)

Using prompt responses as variables for random forest (I realize this training dataset is too small for this to be optimal):

library("randomForest")

# Train the random forest model

model <- randomForest(label ~ c1 + c2 + c3 + c4 + c5 + c6 + c7, data = screen_df)

# Analyze variable importance

var_importance <- importance(model)

print(var_importance)

varImpPlot(model)

# Make predictions on the new data

predictions <- predict(model, newdata = screen_df2)

# Print the predicted labels

print(predictions)

Looking at the importance of each variable to the model produced this plot:

Next came a rough “cross-validation” on held-out data: just ten more papers, five negatives followed by five positives. I chose the negatives to be difficult to distinguish.

With a cutoff of 0.5, we misclassify two negatives. Both misclassified papers include the words mosquito and virus in the text. One involves experiments with a mosquito-infecting virus, Chikungunya, in human cells (2). The other has virus-infected mosquitoes (4 - uses PCR to detect viruses in natural mosquito populations) but not mosquitoes experimentally infected with viruses. We had a small training set and could have refined the prompts to account for these examples. For immediate adjustment, we could change the cutoff value or set a flag zone for values approaching the cutoff. Depending on the nature of your systematic review, it may be better to have a low cutoff and let irrelevant papers slip through rather than being too stringent. It really depends on your goals and how many related papers are available on your topic.
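The flag-zone idea can be sketched in a few lines. This is only an illustration: the function name `triage`, the 0.15 margin, and the example probabilities are all made up, and the right margin would need tuning on real screening data.

```python
def triage(probability, cutoff=0.5, margin=0.15):
    """Map a predicted inclusion probability to include / exclude / review.

    Probabilities within `margin` of the cutoff are flagged for a human.
    """
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if abs(probability - cutoff) <= margin:
        return "review"
    return "include" if probability > cutoff else "exclude"

# Hypothetical model outputs, not results from this post
probabilities = [0.05, 0.42, 0.58, 0.93]
decisions = [triage(p) for p in probabilities]
print(decisions)
```

Lowering `cutoff` lets more borderline papers through, while widening `margin` sends more of them to manual review. Which trade-off is right depends on how costly a missed paper is for your review.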

Other Details

Text Cleaning

Data cleaning is often a time-intensive task for many data science workflows but it is remarkably straightforward in this use case. We saw in the past that including References sections from scientific articles can negatively impact our results. I did not find a simple, consistent way to remove this section although it is likely possible. We can’t just split the text whenever the word References is used as the authors may use it in the main body of the text. For the purposes of this experiment, I simply copy-pasted everything but the references. Using an LLM with a large context window may actually solve this issue in the future.
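One heuristic I did not test, but which might be a starting point, is to cut only at a line that consists of nothing but a heading such as "References", and only if that line sits in the back half of the document. The sketch below assumes the txt file keeps headings on their own lines, which will not hold for every PDF conversion. The heading list, the `min_fraction` parameter, and the function name are all assumptions.

```python
import re

# A line that is only a references-style heading, optionally numbered
HEADING = re.compile(
    r"^\s*(?:\d+\.?\s*)?(references|bibliography|literature cited|works cited)\s*:?\s*$",
    re.IGNORECASE,
)

def strip_references(text, min_fraction=0.5):
    """Drop everything from the last references-style heading onward.

    The text is returned unchanged when no heading line is found or when
    the last one appears before `min_fraction` of the way through.
    """
    lines = text.splitlines()
    for index in range(len(lines) - 1, -1, -1):
        if HEADING.match(lines[index]):
            if index >= min_fraction * len(lines):
                return "\n".join(lines[:index]).rstrip()
            break
    return text
```

Because the pattern must match the whole line, a sentence that merely mentions references in the main body is left alone.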

Prompting

The prompts are key to this entire process. Spending time evaluating your prompts is a worthwhile investment for this task. Some formats worked while others failed spectacularly. Asking the LLM to summarize the text in QA mode was not effective (using the prompt “In two sentences, summarize the methodologic approaches used in the text. Summary:”). Answers were inconsistent, seemingly due to the text-splitting Chroma DB step.

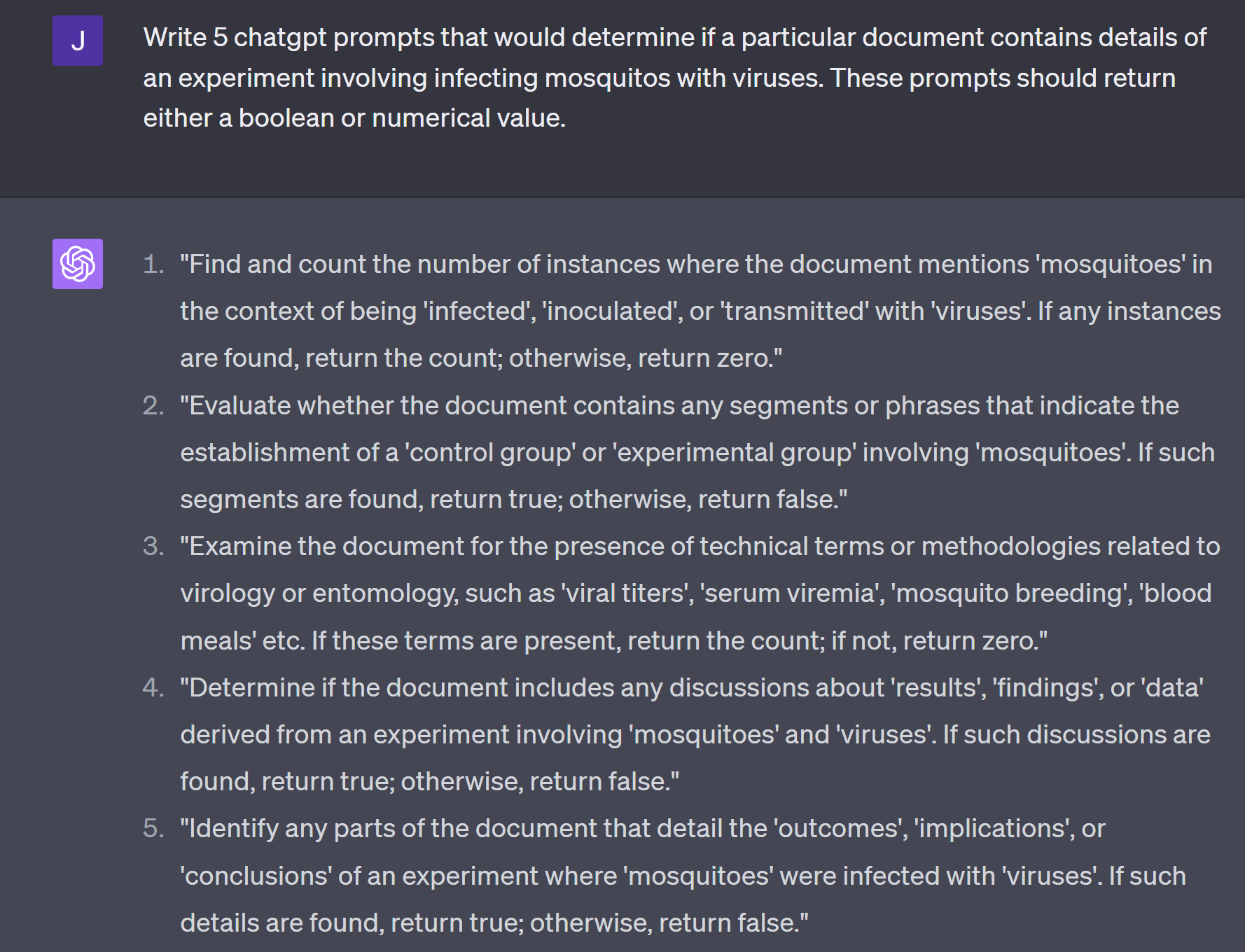

Developing additional tools to evaluate prompt variables may be a good time investment. You can use ChatGPT itself to generate prompts for screening variables. Here are some GPT-4-generated prompts below:

With the code I wrote as a framework, we could build an application to scale evaluation of user and AI-generated prompts on test data, outputting measures of variable importance and performance. With several iterations of this, we should be able to optimize prompts and ultimately screening accuracy.
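A first building block for such an application could simply score each prompt against known labels. The sketch below assumes the responses have already been cleaned to 0/1 values, with None for unparseable answers. The function name and the tiny example data are invented for illustration and are not results from this post.

```python
def prompt_accuracy(rows, labels):
    """Fraction of papers each prompt classified correctly.

    rows: one dict per paper mapping prompt name -> 0/1 prediction or None.
    labels: the true 0/1 label for each paper, in the same order.
    Unparseable (None) responses are skipped for that prompt.
    """
    names = sorted({name for row in rows for name in row})
    report = {}
    for name in names:
        pairs = [
            (row.get(name), label)
            for row, label in zip(rows, labels)
            if row.get(name) is not None
        ]
        correct = sum(1 for prediction, label in pairs if prediction == label)
        report[name] = correct / len(pairs) if pairs else None
    return report

# Made-up cleaned responses for four papers
rows = [
    {"c3": 1, "c5": 1},
    {"c3": 1, "c5": 0},
    {"c3": 0, "c5": 0},
    {"c3": None, "c5": 1},
]
labels = [1, 0, 0, 1]
report = prompt_accuracy(rows, labels)
print(report)
```

Running this over each new batch of user-written or AI-generated prompts would give a quick ranking before investing in a full model fit.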

Fine-Tuning

Fine-tuning a model was an option for trying to improve screening accuracy. Fine-tuning would provide extensive training data to a pre-existing model (like GPT-4) so that it specializes in a specific task or domain (in our case, paper screening). However, it seems like considerably more work to perform. I may revisit fine-tuning if this current approach performs too poorly for specific inclusion criteria.

Chain Types

LangChain provides different chain types that are designed to work for different tasks. We are currently using the RetrievalQA chain, which allows us to ask questions about the specific paper. However, I also tested ConversationalRetrievalChain, which provides the chat history (past prompts with answers) to the LLM. There was no discernible improvement with this chain, so I quickly abandoned it. Being creative with these chain types may improve screening - I simply did not find an obvious application for many of the more complicated ones.

Cost

When iterating this code through a huge number of papers, the cost incurred by API calls quickly becomes a concern. There are two ways to address this: use free models and ask fewer questions. Throughout testing, I tried this workflow with many other open-source, free models from Hugging Face or GPT4All. In their current state, none worked, typically producing nonsensical answers. It seems the kind of zero-shot document question answering we are trying to perform is currently limited to state-of-the-art models. That being said, use of the ChatGPT gpt-3.5-turbo model is pretty cheap. Making 70 calls (7 questions for 10 papers) to the API using this model cost $0.04, which works out to roughly $4.00 per 1,000 papers screened. Once the inevitable open-sourcing of powerful models happens, we can switch to free models or mix their capabilities with paid models. Gorilla just came out, which could be a promising alternative.
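The arithmetic behind that estimate is easy to rerun for other scenarios. The sketch below assumes the average cost per call stays at what I observed ($0.04 for 70 calls). In reality, pricing depends on token counts, so longer chunks or longer answers would shift the number. The function name is made up.

```python
def estimate_cost(n_papers, questions_per_paper=7, cost_per_call=0.04 / 70):
    """Return (number of API calls, estimated dollars) for a screening run.

    Assumption: every call costs the same observed average.
    """
    calls = n_papers * questions_per_paper
    return calls, calls * cost_per_call

calls, cost = estimate_cost(1000)
print(f"{calls} calls, about ${cost:.2f}")

# Dropping to the three most informative questions cuts the bill proportionally
fewer_calls, fewer_cost = estimate_cost(1000, questions_per_paper=3)
print(f"{fewer_calls} calls, about ${fewer_cost:.2f}")
```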

Error Catching

To make this script robust to potential errors, additional error-catching and unit tests should be developed. What if the text file has a special character? What if the response is formatted incorrectly? What if the API is down? What if we hit the API limit? These are but a sampling of ways our code can fail us! Querying ChatGPT helped me put in some generic error handlers to deal with some of these cases.

After this initial screening, we can perform additional screening steps based on the information we’ve gleaned or proceed to feature/variable extraction.

Final Thoughts

Using the ChatGPT gpt-3.5-turbo model, we got promising results at an affordable rate. Fortunately, this framework should only get better with newer models, longer context lengths, and other advances in AI document question answering. While we can’t currently rely on single prompts to screen papers, combining multiple prompts and using responses as predictive variables is an effective approach. These variables can also be integrated with existing natural language processing models or non-AI approaches already in use by organizations for screening, improving accuracy. Because the current training data is so limited, estimates of the ceiling for classification accuracy are skewed. However, I’ll have a more robust example in a future post when I finally piece the workflow components together.