#This is LangChain code to extract information from virology papers in a structured format

#This is heavily derived from code by Martin Zirulnik

#https://www.mziru.com/

import os

os.environ["OPENAI_API_KEY"] = "YOURPRIVATEKEY"

import json

from langchain.chat_models import ChatOpenAI

from langchain import OpenAI

from langchain.embeddings.openai import OpenAIEmbeddings

from langchain.chains import RetrievalQA

from langchain.document_loaders import UnstructuredURLLoader, SeleniumURLLoader

from langchain.vectorstores import Chroma

from langchain.text_splitter import CharacterTextSplitter

from langchain.prompts.chat import (

ChatPromptTemplate,

SystemMessagePromptTemplate,

HumanMessagePromptTemplate,

)

#llm = ChatOpenAI(

# model_name='gpt-3.5-turbo',

# temperature=0,

# request_timeout=120)

from langchain.llms import OpenAI

llm = OpenAI(model_name="text-davinci-003")

embeddings = OpenAIEmbeddings()

text_splitter = CharacterTextSplitter(

chunk_size=1000,

chunk_overlap=0)

#file_path = "C:/Users/____/Downloads/elife-69091-v3.pdf"

#from langchain.document_loaders import UnstructuredPDFLoader

#loader = UnstructuredPDFLoader(file_path)

#abbrev_file_path = file_path.split("/")[-1]

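# first paper

url = 'https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0182386'

# second paper; this assignment overrides the one above, so comment it out to run the first paper

url = 'https://academic.oup.com/jme/article/51/3/661/901264'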

# use this loader for html content

#loader = UnstructuredURLLoader(urls=[url])

loader = SeleniumURLLoader(urls=[url])

# load and chunk webpage text

web_doc = loader.load()

web_docs = text_splitter.split_documents(web_doc)

#from langchain.document_loaders import PyPDFLoader

#loader = PyPDFLoader(file_path)

#web_docs = loader.load_and_split()

# create Chroma vectorstore

webdoc_store = Chroma.from_documents(

web_docs,

embeddings,

collection_name="web_docs")

system_template = """

you are an assistant with expertise in virology designed to identify experimental details from scientific texts

----------------

{context}"""

messages = [

SystemMessagePromptTemplate.from_template(system_template),

HumanMessagePromptTemplate.from_template("{question}")

]

prompt = ChatPromptTemplate.from_messages(messages)

chain_type_kwargs = {"prompt": prompt}

# create Q+A chain

qa = RetrievalQA.from_chain_type(

llm=llm,

chain_type="stuff",

retriever=webdoc_store.as_retriever(),

chain_type_kwargs=chain_type_kwargs,

return_source_documents=False)

query = """

Find all vector competence experiments performed in this document.

For each virus tested, identify the strains of all tested viruses,

tested mosquito species, and experiment type. Provide a brief summary of the findings.

Output should be STRICT JSON, containing

a dictionary containing the website url, and

a list of dictionaries containing the information for each tested virus

formatted like this:

[

{"url": str},

[

{

"virus_tested": str,

"virus_strain": str,

"mosquito_species": str,

"experiment_type": str,

"brief_summary": str

}

]

]

""" + f"""

here is the url: {url}

"""

id_result = qa({"query": query})['result']

data = json.loads(id_result)

print(json.dumps(data, indent=2))

Virology Data Science with LangChain + ChatGPT (Part I)

TL;DR: It sort of works, but it probably needs more prompt engineering and cleaner input data. R + LangChain provides a straightforward workflow for studies like these.

Intro

A while back, this study from Colin Carlson’s group (part of the larger Verena group that does amazing work bringing contemporary AI and machine learning to pandemic preparedness) looked at studies of vector competence between virus and mosquito pairs. By surveying the literature, they built a database of virus-mosquito pairs validated by experimental work and characterized trends and gaps.

Research like this study will greatly benefit from AI advances, but is AI there yet? Newer models of ChatGPT demonstrate better performance on tasks like document question-answering, summarization, and extraction. With LangChain, individual papers can be evaluated with a sequence of queries to try to extract useful info. I’ve been tinkering with LangChain to see if ChatGPT in its current form is capable of accurately extracting vector competence information from scientific papers.

Workflow

The first step of replicating such an experiment would be the literature search. At this point in time, it’s still preferable to curate and find relevant articles semi-manually without the assistance of an AI agent. You gain a lot of insight by performing these steps yourself and frankly, you still want to have human eyes on these papers to make sure you’re capturing relevant information. That being said, there are many non-AI tools that greatly expedite this process (like litsearchr in R).

While I haven’t implemented it, a workflow using Zotero could manage citations found during the literature search, sync the collection for multiple users, and provide access to files locally. I cannot plug Zotero as a citation manager enough. The Zotero Connector web browser plugin makes saving articles to collections grouped around a topic extremely simple, often downloading the PDF locally as well. Beyond scholarly articles, I use it to save webpages, videos, and other content for any topic I’m interested in, and I find it to be the application that works best for me as a second brain/Zettelkasten. Zotero’s API also allows citations to be added or edited programmatically, another area of potential automation. In R, there are a few packages that wrap Zotero’s API, or you could use the classic combo of httr and jsonlite.

Code

At this point, you should have identified a corpus of papers, either as URLs or PDFs. For this code, I use URLs (from the paper’s dataset), but using locally hosted PDFs is a necessity for this to work on closed access papers. I found that the PDF loader produced different (worse) results than the URL loader.

I’ve decided to ask the LLM to output the results in JSON format. JSON is flexible and can easily be changed to a workable format in R through the jsonlite package. With the magic of reticulate, you don’t even need to leave R to run the python code.
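As a minimal sketch of that conversion step, assuming the model returns exactly the nested layout requested in the prompt (a one-key url dictionary followed by a list of records), the output can be flattened into one row per tested virus before it is handed to R. The names `flatten_result` and `rows_to_csv` and the sample input are illustrative and not part of the original code.

```python
import csv
import io
import json

# Field names taken from the JSON layout requested in the prompt.
FIELDS = ["virus_tested", "virus_strain", "mosquito_species",
          "experiment_type", "brief_summary"]


def flatten_result(raw_json):
    """Turn [{"url": ...}, [record, ...]] into a list of flat row dicts."""
    header, records = json.loads(raw_json)
    rows = []
    for record in records:
        row = {"url": header["url"]}
        # Missing keys become empty strings so every row has the same columns.
        for field in FIELDS:
            row[field] = record.get(field, "")
        rows.append(row)
    return rows


def rows_to_csv(rows):
    """Serialize the flat rows as CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["url"] + FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical example input in the requested layout.
example = json.dumps([
    {"url": "https://example.org/paper"},
    [{"virus_tested": "ZIKV", "virus_strain": "PRVABC59",
      "experiment_type": "per os challenge", "brief_summary": "..."}],
])
rows = flatten_result(example)
csv_text = rows_to_csv(rows)
```

Filling missing keys with empty strings is a design choice: it keeps the columns aligned across papers and makes gaps, such as an absent mosquito species, easy to spot in the resulting table.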

Here’s the LangChain code (Python). If you are entirely new to this but want to tinker with it, you will need to download Python (I also recommend Visual Studio Code), locate your OpenAI API key, and pip install the LangChain-related packages the code imports until it runs.

I tried this out with two different URLs. The first is the paper “Differential outcomes of Zika virus infection in Aedes aegypti orally challenged with infectious blood meals and infectious protein meals”. There are a few things I would like to see extracted:

- Mosquito: Ae. aegypti

- Virus: Zika virus (PRVABC59 strain)

- Experiment Type: No expectation here but curious to see how this gets classified

Here’s a result for the first url:

ChatGPT-3.5-turbo + UnstructuredURLLoader

[

{

"url": "https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0182386"

},

[

{

"virus_tested": "Zika Virus",

"virus_strain": "-",

"mosquito_species": "Culex pipiens quinquefasciatus",

"experiment_type": "Infectious bloodmeal",

"brief_summary": "Culex pipiens quinquefasciatus mosquitoes are not competent to transmit Zika Virus."

},

{

"virus_tested": "Yellow fever virus",

"virus_strain": "-",

"mosquito_species": "Aedes aegypti",

"experiment_type": "Mutagenesis analysis",

"brief_summary": "Mutations in the envelope protein of yellow fever virus can affect viral infectivity and dissemination in Aedes aegypti mosquitoes."

},

{

"virus_tested": "Yellow fever virus",

"virus_strain": "-",

"mosquito_species": "Stegomyia mosquitoes",

"experiment_type": "Virus titration",

"brief_summary": "A method for the titration of yellow fever virus in Stegomyia mosquitoes was developed."

},

{

"virus_tested": "Dengue virus",

"virus_strain": "-",

"experiment_type": "Blood meal-induced microRNA analysis",

"brief_summary": "Aedes aegypti mosquitoes produce microRNAs in response to feeding on blood infected with dengue virus, affecting downstream gene expression." }

]

]

It’s picking up on experiments in the citations. But otherwise, the output is formatted correctly.

Here, I’ve tried it again but using the unstructured document loader on a .TXT file with just the Introduction, Materials/Methods, Results, and Discussion (no references or abstract):

ChatGPT-3.5-turbo + UnstructuredFileLoader

[

{

"url": "https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0182386"

},

[

{

"virus_tested": "ZIKV",

"virus_strain": "PRVABC59",

"experiment_type": "per os challenge",

"brief_summary": "Infection rates and dissemination rates between mosquitoes receiving infectious blood meals and infectious protein meals at comparable titers were compared." }

]

]

Much better! But mosquito species is missing. This is the best result I’ve gotten out of the many settings tried.

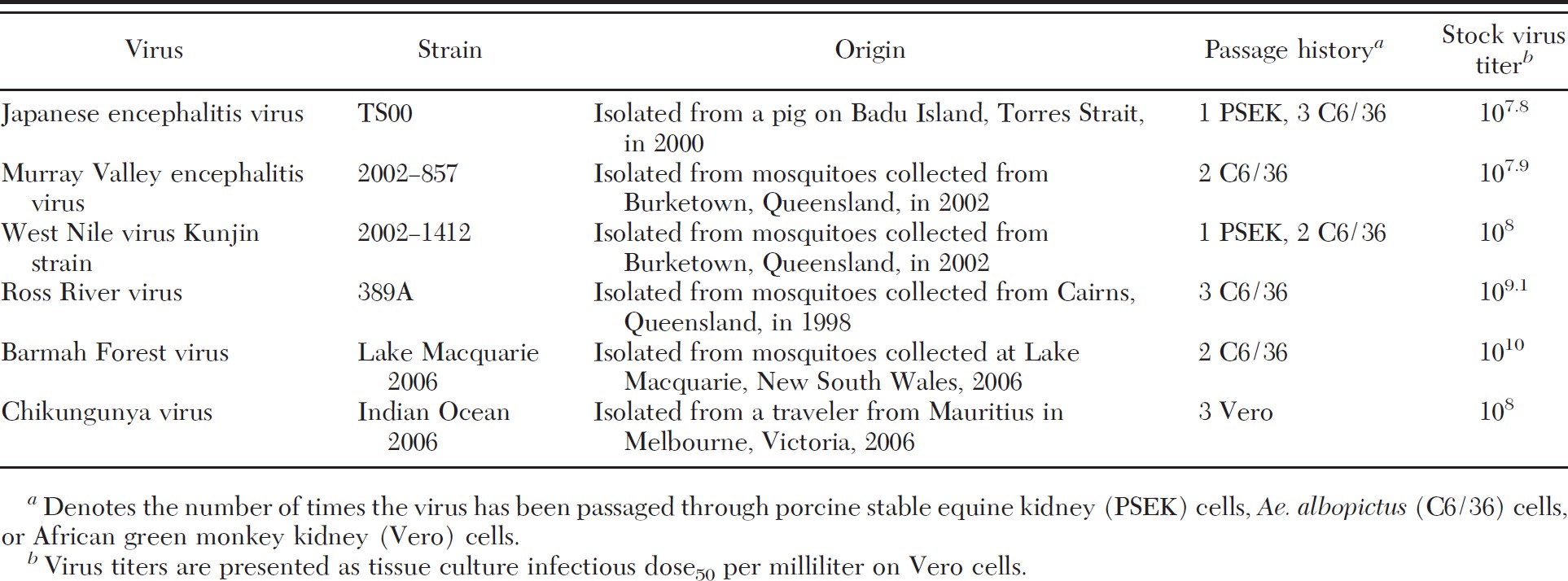

For the second paper, “Aedes albopictus (Diptera: Culicidae) as a Potential Vector of Endemic and Exotic Arboviruses in Australia”, many different viruses are tested in Ae. albopictus, so there is considerably more info to be picked up on. The essential virus info is in this .JPEG table, so we’ll see how the model fares.

Here’s a result for the second url:

text-davinci-003 + SeleniumLoader

"virus_tested": "Murray Valley Encephalitis and Other Queensland Arboviruses",\n

"virus_strain": "N/A",\n "mosquito_species": "Aedes Aegypti, Culex

Annulirostris and Other Mosquitoes (Diptera: Culicidae)",\n

"experiment_type": "Quantitative Studies",\n "brief_summary": "Found vector

competence of Aedes Aegypti, Culex Annulirostris and other mosquitoes with Murray

Valley Encephalitis and other Queensland Arboviruses"\n },\n {\n

"virus_tested": "Endemic Ross River Virus Transmission",\n "virus_strain":

"N/A",\n "mosquito_species": "N/A",\n "experiment_type": "N/A",\n

"brief_summary": "'

It really did not pan out here. The output couldn’t even be parsed as JSON. Here’s another try with a .TXT version of the paper. I also had to use optical character recognition (OCR) to extract the text from the table containing the virus info:

ChatGPT-3.5-turbo + UnstructuredFileLoader

[

{

"url": "https://academic.oup.com/jme/article/51/3/661/901264"

},

[

{

"virus_tested": "Japanese encephalitis virus",

"virus_strain": "TS00 Strain",

"mosquito_species": "Aedes aegypti",

"experiment_type": "Susceptibility to infection, Growth kinetics, and Transmission",

"brief_summary": "Mosquitoes exhibited high rates of infection, dissemination, and transmission for JEV across all temperatures tested."

},

{

"virus_tested": "Murray Valley encephalitis virus",

"virus_strain": "2002-857 Strain",

"mosquito_species": "Aedes aegypti",

"experiment_type": "Susceptibility to infection, Growth kinetics, and Transmission",

"brief_summary": "No mosquitoes became infected with MVEV at any of the temperatures tested."

},

{

"virus_tested": "West Nile virus KUNV",

"virus_strain": "2002-1412 Strain",

"mosquito_species": "Aedes aegypti",

"experiment_type": "Susceptibility to infection, Growth kinetics, and Transmission",

"brief_summary": "Mosquitoes exhibited high rates of infection, dissemination, and transmission for WNV across all temperatures tested."

},

{

"virus_tested": "Ross River virus",

"virus_strain": "389A Strain",

"mosquito_species": "Culex annulirostris",

"experiment_type": "Susceptibility to infection, Growth kinetics, and Transmission",

"brief_summary": "Mosquitoes exhibited high rates of infection, dissemination, and transmission for RRV across all temperatures tested."

},

{

"virus_tested": "Barmah Forest virus",

"virus_strain": "Lake Macquarie 2006 Strain",

"mosquito_species": "Culex annulirostris",

"experiment_type": "Susceptibility to infection, Growth kinetics, and Transmission",

"brief_summary": "No mosquitoes became infected with BFV at any of the temperatures tested."

},

{

"virus_tested": "Chikungunya virus",

"virus_strain": "Indian Ocean 2006 Strain",

"experiment_type": "Susceptibility to infection, Growth kinetics, and Transmission",

"brief_summary": "Mosquitoes exhibited high rates of infection and dissemination for CHIKV across all temperatures tested, but transmission rates were low." }

]

]

Well, that is better, but a lot of the information (summary/mosquito) is inaccurate and I had to help it by putting the table information in text form. There are document loaders that can perform OCR on PDFs, so that might be necessary to account for situations where essential information is contained in an image file table.

I’ve only shown a few results here but my overall findings are that the model being used obviously matters as does the loader. It also keeps trying to include cited papers in the output rather than just focusing on experiments performed in the paper. I tried adding a stipulation to the prompt to ignore cited papers but that didn’t seem to improve the results.
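One pre-processing step that might reduce this is to drop the reference list before the text is chunked, which is roughly what the hand-made .TXT files do. The sketch below is an assumption and was not tested in this post: it cuts the text at the last line consisting only of a heading such as "References". The helper name `strip_references` and the list of headings are hypothetical.

```python
import re

# Headings that commonly open a reference list (an assumed, non-exhaustive set).
REFERENCE_HEADING = re.compile(
    r"^[ \t]*(references cited|references|literature cited|bibliography)[ \t]*$",
    re.IGNORECASE | re.MULTILINE,
)


def strip_references(text):
    """Return the text up to the last standalone reference heading, if any."""
    matches = list(REFERENCE_HEADING.finditer(text))
    if not matches:
        return text  # no heading found; leave the text untouched
    # Use the last match so an earlier standalone heading is not cut at.
    return text[:matches[-1].start()].rstrip()


# Hypothetical example text.
paper = "Methods\nMosquitoes were fed ZIKV.\n\nReferences\n1. Some cited study."
cleaned = strip_references(paper)
```

In the pipeline, something like this would be applied to the `page_content` of each loaded document before `text_splitter.split_documents` is called. Papers that format their reference heading differently would pass through unchanged, so it is a partial fix at best.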

Either I am writing the prompts poorly or the models I tested aren’t quite there yet for this type of work (I did not test GPT-4). I suspect better results can be produced using existing models by messing around further with the code, namely breaking up queries, changing the wording of the prompt, and feeding it cleaner versions of papers (additional pre-processing steps). Implementing some sort of flagging system to distinguish good from bad results may still offer a benefit, with manual curation performed only on the bad ones.
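A flagging step could be as simple as the sketch below. It encodes an assumption about what counts as a "bad" result, not a validated rule: an output is flagged for manual curation if it fails to parse as JSON, does not match the requested layout, or contains records with missing or placeholder fields. The names `flag_result` and `PLACEHOLDERS` are hypothetical.

```python
import json

REQUIRED_FIELDS = ["virus_tested", "virus_strain", "mosquito_species",
                   "experiment_type", "brief_summary"]
# Values the models used in place of real information in the outputs shown earlier.
PLACEHOLDERS = {"", "-", "n/a"}


def flag_result(raw_output):
    """Return a list of problems; an empty list means nothing was flagged."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return ["output is not valid JSON"]

    # Expected layout: [{"url": str}, [record, ...]]
    if not (isinstance(data, list) and len(data) == 2
            and isinstance(data[0], dict) and isinstance(data[1], list)):
        return ["output does not match the requested layout"]

    problems = []
    for index, record in enumerate(data[1]):
        if not isinstance(record, dict):
            problems.append(f"record {index}: not a dictionary")
            continue
        for field in REQUIRED_FIELDS:
            value = str(record.get(field, "")).strip().lower()
            if value in PLACEHOLDERS:
                problems.append(f"record {index}: missing {field}")
    return problems


# Hypothetical example outputs.
good = json.dumps([{"url": "u"}, [{
    "virus_tested": "ZIKV", "virus_strain": "PRVABC59",
    "mosquito_species": "Aedes aegypti",
    "experiment_type": "per os challenge", "brief_summary": "summary"}]])
bad = json.dumps([{"url": "u"}, [{"virus_tested": "ZIKV", "virus_strain": "-"}]])
```

This only checks completeness and format. It cannot tell whether a filled-in field is accurate, so results that pass would still need spot checks against the papers.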

Final Thoughts

There are many other data science projects in virology or science in general that could benefit from similar workflows. Scientists evaluate methodologies/model systems based on a hierarchy of evidence and AI-assisted extraction can help us contextualize experiments within that hierarchy.

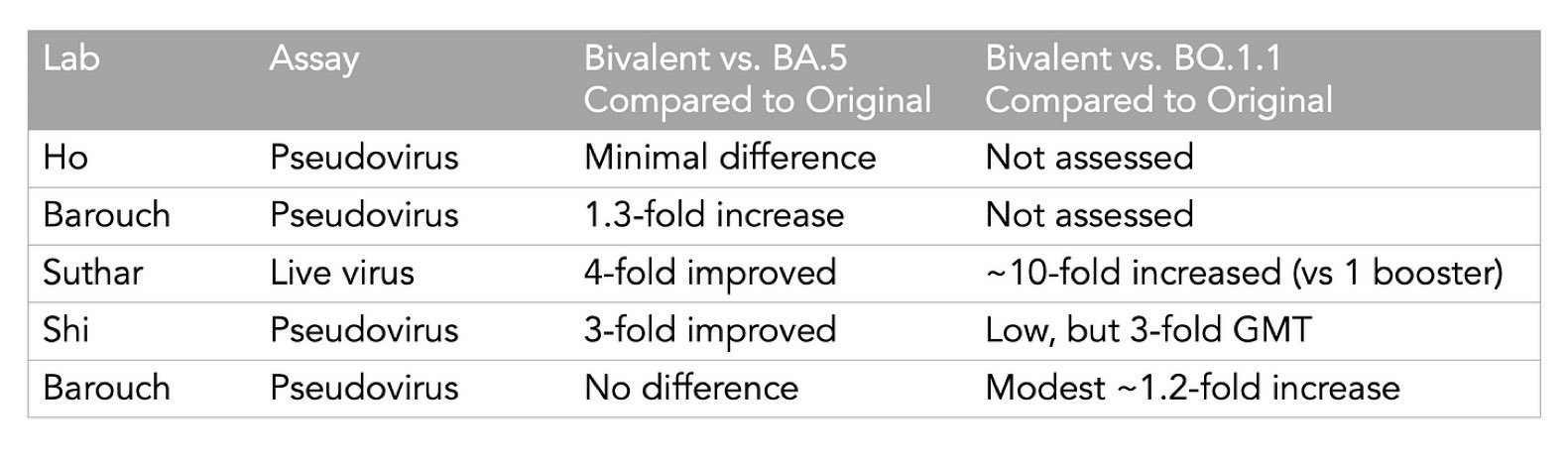

For example, Eric Topol posts these useful tables on SARS-CoV-2 neutralization studies by different groups categorizing them by the type of virus system used (pseudovirus, authentic virus, or attenuated virus). By integrating such evidence faster and on a larger scale, we’ll be able to build better living systematic reviews and other interactive reports.

I’ve made interactive research documents in the past. In this example, it’s semi-automated (mostly at the data collection, cleaning, and validation steps) with manual curation. Data extraction from semi- or unstructured text is by far the biggest time sink and I’ve been trying to alleviate this burden with AI tools for a few months. Every couple of months, I’ll test out the newest AI updates and while it gets closer and closer, it’s still not quite there yet for my use cases.