# A large amount of the code using litsearchr is derived from this tutorial

# https://claudiu.psychlab.eu/post/automated-systematic-literature-search-with-r-litsearchr-easypubmed/

# litsearchr isn't yet on CRAN, need to install from github

if (require(litsearchr))

remotes::install_github("elizagrames/litsearchr", ref = "main")

# Packages to load/install

packages <- c(

"easyPubMed",

"litsearchr", "stopwords", "igraph",

"ggplot2", "ggraph", "ggrepel", "dplyr", "httr"

)

# Install packages not yet installed

installed_packages <- packages %in% rownames(installed.packages())

if (any(installed_packages == FALSE)) {

install.packages(packages[!installed_packages])

}

# Load packages

lapply(packages, library, character.only = TRUE)

virus = "parechovirus"

term <- '(parechovirus[Title/Abstract] AND brain[Title/Abstract])'

pmid_list <- easyPubMed::get_pubmed_ids(term)

# Search

pm_xml <- easyPubMed::fetch_pubmed_data(pmid_list)

pm_df <- easyPubMed::table_articles_byAuth(pubmed_data = pm_xml,

included_authors = "first",

getKeywords = TRUE, max_chars = 2500)

as.data.frame(lapply(head(pm_df[c("pmid", "doi", "jabbrv", "keywords", "abstract")]), substr, start = 1, stop = 30))

#Find article types and remove review articles from considered articles

art_types = lapply(articles_to_list(pm_xml), function(x){custom_grep(x, "PublicationType", "char")})

combined_at <- sapply(art_types, paste, collapse = ", ")

pm_df = pm_df[which(!grepl("Review", combined_at)),]Automating Literature Searches in R (Part II)

TLDR: Sets up a recurring PubMed search that adapts to changes in fields over time by adjusting search terms. Adds papers to the reference manager Zotero where full text PDFs can be acquired if available. Downstream, AI can be used to analyze abstracts or full texts.

This is Part II on a series about applying AI for data science on scientific articles. See Part I: Virology Data Science with LangChain + ChatGPT. Stay tuned for future posts where we put it all together.

Intro

Looking to generate insights from an automatically updating set of academic papers using AI? Here’s code for a workflow that can adjust search terms as fields change, adding paper info, abstracts, and PDFs if available.

Workflow

AI tools for finding and summarizing academic papers exist but they typically provide limited options to scale across entire bibliographies and integrate with other tools. Coding with LangChain allows you to interrogate websites or files in bulk but it doesn’t help you find papers in the first place. Combining the R package ‘litsearchr’ and my favorite reference manager Zotero (courtesy of the ‘zoteror’ package), you can rapidly build up a set of documents (a corpus) on a topic of interest.

Giving AI the appropriate things to ingest is important! We want to build curated sources of information and extract the contained knowledge. But we also can use AI to summarize the context behind the knowledge, categorizing and reducing complex data into tidy variables for analysis. Analyzing metadata can reveal important trends in how a particular field evolves over time. Writing this in R means complete control and easy scheduling of code execution meaning we can build fully customizable, automatically updated dashboards based on a scientific topic. We can even use AI to help us code them or provide suggestions that help us make sense of trends.

Code

Let’s look at that R code:

This runs a PubMed search for specific search terms and returns the results in a neat data frame. I’m looking for papers about a respiratory virus parechovirus and its ability to infect the brain. I wasn’t interested in review articles so we also used the XML data from the search to identify and remove them from the results.

# Looking for additional terms that may be useful for lit search

# Extract terms from title text

pm_terms_title <- litsearchr::extract_terms(text = pm_df[,"title"],

method = "fakerake", min_freq = 3, min_n = 2,

stopwords = stopwords::data_stopwords_stopwordsiso$en)

# Extract terms from keywords

pm_terms_keywords <- litsearchr::extract_terms(keywords = trimws(unlist(strsplit(pm_df[,"keywords"], ";"))),

method = "tagged", min_freq = 3, min_n = 1, max_n = 5)

# Pool the extracted terms together

pm_terms <- c(pm_terms_title, pm_terms_keywords)

pm_terms <- pm_terms[!duplicated(pm_terms)]

pm_docs <- paste(pm_df[, "title"], pm_df[, "abstract"]) # we will consider title and abstract of each article to represent the article's "content"

pm_dfm <- litsearchr::create_dfm(elements = pm_docs, features = pm_terms) # document-feature matrix

pm_coocnet <- litsearchr::create_network(pm_dfm, min_studies = 3)

ggraph(pm_coocnet, layout = "stress") +

coord_fixed() +

expand_limits(x = c(-3, 3)) +

geom_edge_link(aes(alpha = weight)) +

geom_node_point(shape = "circle filled", fill = "white") +

geom_node_text(aes(label = name), hjust = "outward", check_overlap = TRUE) +

guides(edge_alpha = "none") +

theme_void()This bit is looking at all the abstracts and titles from the papers from our original search and suggesting additional terms that maybe helpful in expanding our search. The graph visualizes the “strength” of these terms:

We will use terms that pass a certain cutoff for a second search that can help us find additional relevant papers.

pm_node_strength <- igraph::strength(pm_coocnet)

pm_node_rankstrength <- data.frame(term = names(pm_node_strength), strength = pm_node_strength, row.names = NULL)

pm_node_rankstrength$rank <- rank(pm_node_rankstrength$strength, ties.method = "min")

pm_node_rankstrength <- pm_node_rankstrength[order(pm_node_rankstrength$rank),]

pm_plot_strength <-

ggplot(pm_node_rankstrength, aes(x = rank, y = strength, label = term)) +

geom_line(lwd = 0.8) +

geom_point() +

ggrepel::geom_text_repel(size = 3, hjust = "right", nudge_y = 3, max.overlaps = 30) +

theme_bw()

pm_plot_strength

# Impose a cutoff for informative terms

pm_cutoff_cum <- litsearchr::find_cutoff(pm_coocnet, method = "cumulative", percent = 0.8)

pm_cutoff_change <- litsearchr::find_cutoff(pm_coocnet, method = "changepoint", knot_num = 3)

pm_plot_strength +

geom_hline(yintercept = pm_cutoff_cum, color = "red", lwd = 0.7, linetype = "longdash", alpha = 0.6) +

geom_hline(yintercept = pm_cutoff_change, color = "orange", lwd = 0.7, linetype = "dashed", alpha = 0.6)

pm_cutoff_crit <- pm_cutoff_change[which.min(abs(pm_cutoff_change - pm_cutoff_cum))] # e.g. nearest cutpoint to cumulative criterion (cumulative produces one value, changepoints may be many)

pm_selected_terms <- litsearchr::get_keywords(litsearchr::reduce_graph(pm_coocnet, pm_cutoff_crit))

pm_selected_terms

# Terms you want to exclude from being used in follow up search

age_spec = c("child", "adult", "elderly","infant", "newborn","viral")

pm_selected_terms = pm_selected_terms[-which(pm_selected_terms%in%age_spec)]

#LLM call to group selected terms automatically

#Do not share your OpenAI API key

api_key <- "<replace with your own key>"

response <- POST(

# curl https://api.openai.com/v1/chat/completions

url = "https://api.openai.com/v1/chat/completions",

# -H "Authorization: Bearer $OPENAI_API_KEY"

add_headers(Authorization = paste("Bearer", api_key)),

# -H "Content-Type: application/json"

content_type_json(),

# -d '{

# "model": "gpt-3.5-turbo",

# "messages": [{"role": "user", "content": " QUERY "}]

# }'

encode = "json",

body = list(

model = "gpt-3.5-turbo",

messages = list(list(role = "user",

content = paste0("Sort these terms provided into three groups. When these three groups are mixed and match, they should produce search terms for find documents related to", term, ". Only output the three groups. Terms:", paste(pm_selected_terms, collapse = ", ") ) ))

)

)

chatGPT_answer <- content(response)$choices[[1]]$message$content

# Split the string into different groups

groups <- strsplit(chatGPT_answer, "\n\n")[[1]]

# Initialize empty vectors for each group

group1 <- vector()

group2 <- vector()

group3 <- vector()

# Loop through each group and split into individual items

for (group in groups) {

items <- strsplit(group, "\n- ")[[1]][-1] # Exclude the group title

# Assign items to corresponding groups

if (grepl("^Group 1", group)) {

group1 <- items

} else if (grepl("^Group 2", group)) {

group2 <- items

} else if (grepl("^Group 3", group)) {

group3 <- items

}

}

#Create search query

pm_grouped_selected_terms <- list(

group1 = group1,

group2 = group2,

group3 = group3

)

updated_search = litsearchr::write_search(

pm_grouped_selected_terms,

languages = "English",

exactphrase = TRUE,

stemming = FALSE,

closure = "left",

writesearch = FALSE

)

#Repeat search and add results to original search

pmid_list_up <- easyPubMed::get_pubmed_ids(updated_search)

# Search

pm_xml_up <- easyPubMed::fetch_pubmed_data(pmid_list_up)

pm_df_up <- easyPubMed::table_articles_byAuth(pubmed_data = pm_xml_up,

included_authors = "first",

getKeywords = TRUE, max_chars = 2500)

art_types = lapply(articles_to_list(pm_xml_up), function(x){custom_grep(x, "PublicationType", "char")})

combined_at <- sapply(art_types, paste, collapse = ", ")

pm_df_up = pm_df_up[which(!grepl("Review", combined_at)),]

#Combining the original and updated search results, no duplicate entries

#Removing empty lines

pm_df_final = rbind(pm_df, pm_df_up[-which(pm_df_up$doi %in% pm_df$doi),])

pm_df_final <- pm_df_final[complete.cases(pm_df_final$title, pm_df_final$doi), ]We curated those search terms, both using a cutoff and by eliminating specific terms that might be too general for our purposes. We even called ChatGPT within R to help us group these terms to make more coherent search queries (you’ll need your OpenAI API key for that or you can just group these manually). We then re-ran the PubMed search and combined the results from the two searches.

#Much of this can be find in the documentation for the zoteror package:

#https://github.com/giocomai/zoteror

#Zotero r

if(!require("remotes")) install.packages("remotes")

remotes::install_github(repo = "giocomai/zoteror")

library(zoteror)

#Do not share your keys and user IDs publicly

zuser = <replace with your own user id #>

zkey = "<replace with your own key>"

zot_set_options(user = zuser, credentials = zkey)

#Make a collection for the new search - if it already exists, just save the key

colkey <- zot_create_collection(collection_name = paste0(virus,"-litsearchr"))

#Make a Zotero API request to avoid adding duplicate items already in the collection

params <- list(postId = 1)

# Make the API request and extract the response content

# API request goes to the collection and returns info about items inside

# This is using the httr package

apiquery=paste0("https://api.zotero.org/users/", zuser,"/collections/",colkey, "/items?key=",zkey)

response <- GET(apiquery, query = params)

response_content <- content(response, "text")

#Extract DOIs

doi_matches <- gregexpr("\\d+\\.\\d+/\\S+", response_content)

# Extract the matched substrings and store them in a vector

dois <- regmatches(response_content, doi_matches)[[1]]

dois <- gsub("\\\",", "", dois)

# Remove papers already in collection from papers to add using DOI as a unique identifier

pm_df_final = pm_df_final[-which(pm_df_final$doi%in%dois),]

# Add remaining papers to the collection

add_df=data.frame()

for(i in 1:nrow(pm_df_final)){

pmsearch <-

tibble::tribble(~itemType, ~creators, ~title, ~tags, ~DOI, ~publicationTitle, ~abstractNote, ~date,

"journalArticle", paste0(pm_df_final$lastname[i],", ",pm_df_final$firstname[i]), pm_df_final$title[i], pm_df_final$keywords[i], pm_df_final$doi[i], pm_df_final$journal[i], pm_df_final$abstract[i], paste0(pm_df_final$year[i],"-",pm_df_final$month[i],"-",pm_df_final$day[i]))

pmsearch_zot <-

pmsearch %>%

mutate(creators = zot_convert_creators_to_df_list(creator = creators),

tags = zot_convert_tags_to_df_list(tags = tags))

add_df=rbind(add_df,pmsearch_zot)

}

zot_create_items(item_df = add_df,

collection = paste0(virus,"-litsearchr"))

#For how to schedule this script to run periodically,

#see https://beamilz.com/posts/series-gha/2022-series-gha-2-creating-your-first-action/en/

#or https://cran.r-project.org/web/packages/taskscheduleR/vignettes/taskscheduleR.html

#or https://solutions.posit.co/operations/scheduling/In order to make this work with your Zotero account, we need to replace a few of the variables in the code. To find you Zotero details, login to your account on their website and go to Settings. From there, go to Feeds/API. Your user id (zuser) should show up in the text “Your userID for use in API calls is XXXXX”. To get an API key, click Create new private key. Make a key that has all access options for your personal library and read/write permissions. Then, paste that key into zkey. Now, we can add the found papers to your Zotero library.

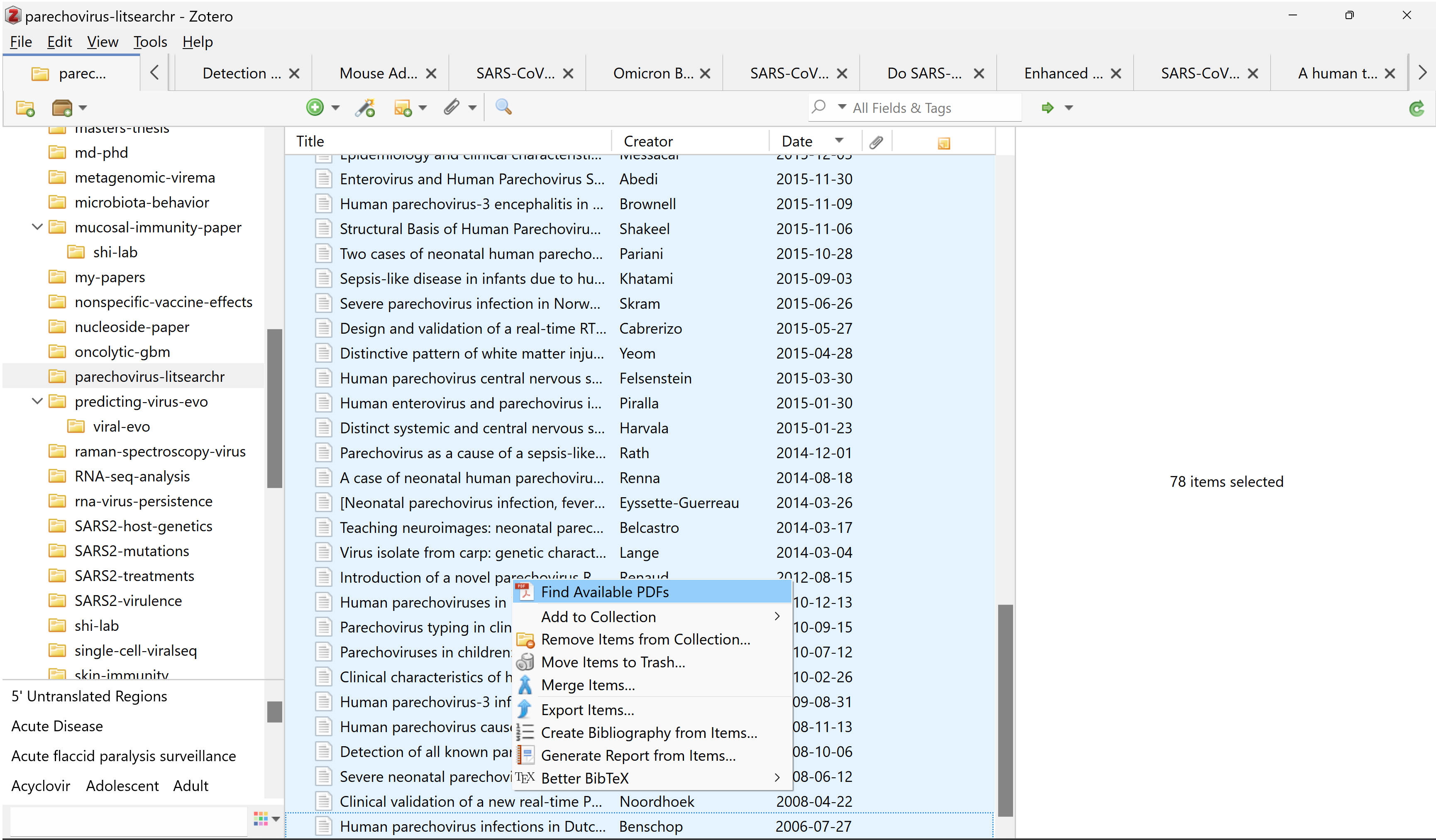

After running the code, the papers should be added to your library and you should be able to inspect the data in the Zotero desktop application. We can use the desktop app to find available full-text PDFs and download them locally.

This process typically can’t find available PDFs for all papers but it usually works for the majority of them. This process also takes quite a bit of time and can fill up disk space quickly if you aren’t paying attention.

Final Thoughts

Now, we have a stream of curated papers organized around a topic. Next, I’ll build an R Shiny dashboard to monitor our AI-driven outputs on this data stream, where we can inspect data and observe trends. Finally, we are going to see how big we can scale this. Can we use AI to perform huge data science studies on scientific articles? We’re going to piece it all together in future posts.